Directed Acyclic Graphs

A directed graph with no cycles is called a directed acyclic graph.

By definition, they also don't have closed walks (remember that a closed walk is a walk that begins and ends at the same vertex).

Also called DAGs, directed acyclic graphs are helpful for things such as task scheduling:

A schedule for performing tasks specifies which tasks to do at successive steps.

In a scheduling problem, some tasks depend on other tasks being completed beforehand. Because of that, we need to order the tasks. Such ordering is called topological sorting:

A topological sort of a finite DAG is a list of all the vertices such that each vertex

appears earlier in the list than every other vertex reachable from .

| A vertex |

| A vertex |

There is a possibility that there is no minimum vertex in a DAG, but there can be a lot of minimal ones.

Two vertices are said to be comparable if one of them is reachable from the other.

In a set of vertices, when any two of them are comparable, it is called a chain. It implies that the sequence of elements has an order.

The opposite of that, when no two elements are comparable, is the antichain.

So,

When a vertex in a chain is reachable from all the other vertices, it is the maximum element of the chain.

Every

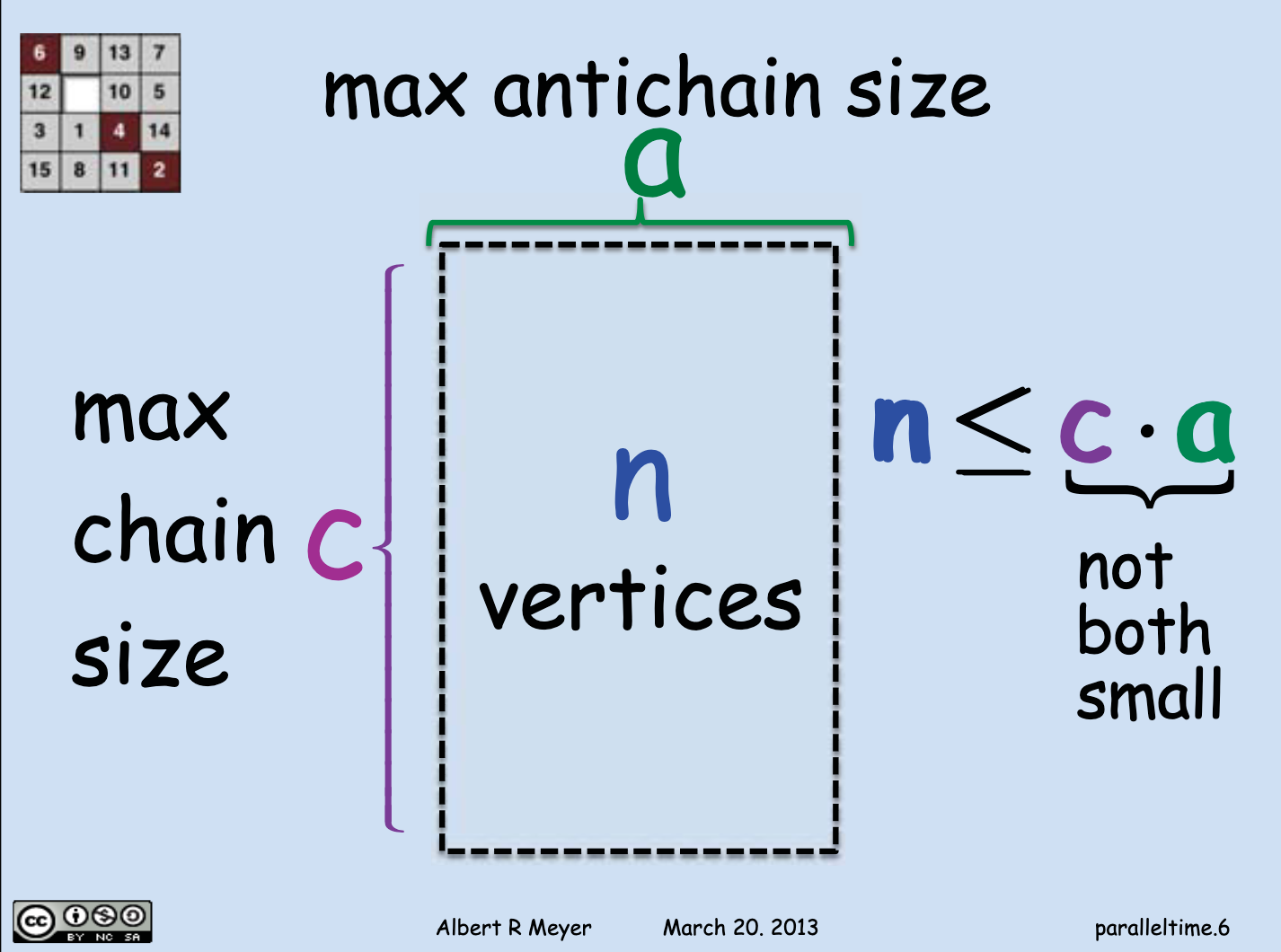

The product of the maximum chain size and the maximum antichain size is greater than or equal to the number of vertices:

This is Dilworth's lemma.

To help visualize it better in our mind's eye, it'd be good to know that the size of the longest chain is called the length, and the size of the longest antichain the width.

Then, this image taken from the slides of the lecture would make more sense:

A partition of a set

So, every element of

Let's imagine a set

We can think of a partition of it into three blocks, such as:

A parallel schedule for a DAG is a partition of its vertices into blocks

So, for example, the vertices in

The block

The number of blocks is the time of the schedule.

The largest chain ending at element

The number of elements in the chain that are less than

So, in a parallel schedule, before task depth(a) steps.