Conditional Probability

What is the probability that you own a cat, given that you have just bought cat food?

That's conditional probability: the probability that an event occurs, given that we know some other event has occurred.

Suppose that a friend rolls a fair die, and we want to know the probability of rolling a 1. The answer is obvious: there are 6 equally likely outcomes, so the probability of rolling a 1 is $\frac{1}{6}$.

Now suppose our friend tells us that she has rolled an odd number. What's the probability of rolling a 1 now?

The possibilities are now down to three (the roll can only be 1, 3, or 5), so the probability of rolling a 1 is $\frac{1}{3}$.
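The same reasoning can be checked by brute force: list the equally likely outcomes, keep only those consistent with what we know, and count. A minimal Python sketch, assuming a fair six-sided die; the helper name `prob` is hypothetical and introduced only for illustration:

```python
from fractions import Fraction

# Outcomes of one roll of a fair six-sided die, all equally likely.
outcomes = [1, 2, 3, 4, 5, 6]

def prob(event, space):
    """Fraction of the equally likely outcomes in `space` that satisfy `event`."""
    hits = [x for x in space if event(x)]
    return Fraction(len(hits), len(space))

# Without extra information: 1 favorable outcome out of 6.
p_one = prob(lambda x: x == 1, outcomes)

# After learning the roll is odd, the space shrinks to {1, 3, 5}.
odd_outcomes = [x for x in outcomes if x % 2 == 1]
p_one_given_odd = prob(lambda x: x == 1, odd_outcomes)

print(p_one, p_one_given_odd)  # 1/6 1/3
```

Using `Fraction` instead of floats keeps the answers exact, so they can be compared directly with the hand calculation.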

This shows that new knowledge changes probabilities.

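If you remember the tree model example in the previous section, you may recognize that at the last step we were using the product rule:

$$\Pr[A \cap B] = \Pr[B] \cdot \Pr[A \mid B]$$

The conditional probability of $A$ given $B$, the probability that $A$ occurs once we know that $B$ has occurred, is defined as:

$$\Pr[A \mid B] = \frac{\Pr[A \cap B]}{\Pr[B]}$$

So, multiplying both sides by $\Pr[B]$ gives exactly the product rule above. For the die, $\Pr[\text{roll is } 1 \mid \text{roll is odd}] = \frac{1/6}{1/2} = \frac{1}{3}$, matching the count above.

Since we can't divide by zero, $\Pr[A \mid B]$ is undefined when $\Pr[B] = 0$.

Conditioning on $B$ restricts the sample space to the outcomes in $B$: outcomes outside $B$ are discarded, and the probabilities of the remaining outcomes are scaled by $\frac{1}{\Pr[B]}$ so that they sum to 1.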

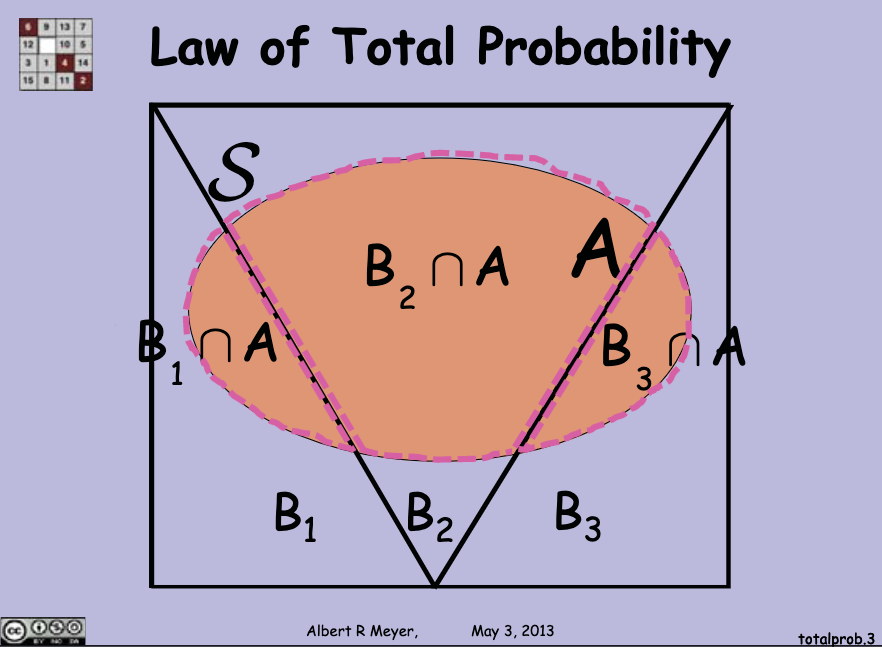
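The definition $\Pr[A \mid B] = \frac{\Pr[A \cap B]}{\Pr[B]}$ translates directly into code for a finite sample space with equally likely outcomes. The sketch below is an illustration under that assumption; `pr`, `cond_prob`, and the two-dice events are hypothetical names chosen for this example. It refuses to condition on an event of probability zero and checks the product rule on one case.

```python
from fractions import Fraction
from itertools import product

# Sample space: ordered pairs from rolling two fair dice (36 equally likely outcomes).
space = list(product(range(1, 7), repeat=2))

def pr(event):
    """Probability of `event` under the uniform distribution on `space`."""
    return Fraction(sum(1 for w in space if event(w)), len(space))

def cond_prob(a, b):
    """Pr[A | B] = Pr[A and B] / Pr[B]; undefined when Pr[B] = 0."""
    p_b = pr(b)
    if p_b == 0:
        raise ValueError("cannot condition on an event with probability 0")
    return pr(lambda w: a(w) and b(w)) / p_b

def sum_is_8(w):
    return w[0] + w[1] == 8

def first_is_3(w):
    return w[0] == 3

p = cond_prob(sum_is_8, first_is_3)
print(p)  # 1/6

# Product rule: Pr[A and B] = Pr[B] * Pr[A | B].
assert pr(lambda w: sum_is_8(w) and first_is_3(w)) == pr(first_is_3) * p
```

Given that the first die shows 3, the only way to reach a sum of 8 is a 5 on the second die, so the conditional probability is $\frac{1}{6}$.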

Let's take a look at what is called the law of total probability.

It is a rule for reasoning about probability by cases. You may remember from (Two) Proof Methods that one of them was Proof by Cases. Breaking a complicated problem into cases is often useful; now let's see how it helps with probability.

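So, let's say that a sample space $S$ is split into disjoint events $B_1, B_2, \ldots, B_n$, each with nonzero probability, whose union is all of $S$, and let $A$ be any event.

Since every outcome lies in exactly one $B_i$, the events $A \cap B_1, A \cap B_2, \ldots, A \cap B_n$ are disjoint and their union is $A$.

We can add the probabilities of those intersections to find the probability of $A$:

$$\Pr[A] = \sum_{i=1}^{n} \Pr[A \cap B_i]$$

Recall that the product rule gives the probability of an intersection, $\Pr[A \cap B_i] = \Pr[B_i] \cdot \Pr[A \mid B_i]$, so we can substitute:

$$\Pr[A] = \sum_{i=1}^{n} \Pr[A \mid B_i] \cdot \Pr[B_i]$$

Now let's state it precisely. This is the law of total probability:

If $S$ is a disjoint union of $B_1, B_2, \ldots, B_n$, each with nonzero probability, then for any event $A$:

$$\Pr[A] = \sum_{i=1}^{n} \Pr[A \mid B_i] \cdot \Pr[B_i]$$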
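The law of total probability, $\Pr[A] = \sum_i \Pr[A \mid B_i] \cdot \Pr[B_i]$, can be checked numerically against a direct count. This is a sketch assuming a fair die; the partition $\{1, 2\}$, $\{3, 4\}$, $\{5, 6\}$ and the event "the roll is at least 4" are hypothetical choices made only for illustration.

```python
from fractions import Fraction

outcomes = range(1, 7)  # fair six-sided die

def pr(event_set):
    """Probability of a set of outcomes under the uniform distribution."""
    return Fraction(len(event_set), len(outcomes))

A = {4, 5, 6}                         # the roll is at least 4
partition = [{1, 2}, {3, 4}, {5, 6}]  # disjoint cases covering every outcome

# The cases must not overlap and together must cover the sample space.
assert set().union(*partition) == set(outcomes)
assert sum(len(b) for b in partition) == len(outcomes)

# Pr[A] = sum over the cases B_i of Pr[A | B_i] * Pr[B_i]
total = sum((pr(A & b) / pr(b)) * pr(b) for b in partition)

print(total, pr(A))  # 1/2 1/2
```

The per-case conditional probabilities are $0$, $\frac{1}{2}$, and $1$; weighting each by $\frac{1}{3}$ and adding gives the same $\frac{1}{2}$ as counting $\{4, 5, 6\}$ directly.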

Now, let's look at the famous Bayes' Law.

For example, let's say that two friends are at a café that offers only two drinks: coffee or tea (that's a lousy café). Assume each friend is equally likely to order either one, independently of the other. We learn that at least one of them is drinking coffee. What's the probability that both are drinking coffee?

Before using Bayes' theorem, let's first enumerate all the possibilities.

Given that at least one of them is drinking coffee, there are three possibilities: CC (both are drinking coffee), CT (the first is drinking coffee and the second is drinking tea), and TC (the first is drinking tea and the second is drinking coffee).

Since these three outcomes are equally likely, the probability that both of them are drinking coffee is $\frac{1}{3}$.
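The enumeration of the café cases can be reproduced mechanically. This sketch assumes that each friend independently picks coffee (`C`) or tea (`T`) with equal probability, which is what makes the listed cases equally likely:

```python
from fractions import Fraction
from itertools import product

# All (first friend, second friend) orders: CC, CT, TC, TT, equally likely.
outcomes = ["".join(pair) for pair in product("CT", repeat=2)]

# Condition on "at least one is drinking coffee": this drops TT.
at_least_one_coffee = [o for o in outcomes if "C" in o]
both_coffee = [o for o in at_least_one_coffee if o == "CC"]

print(at_least_one_coffee)  # ['CC', 'CT', 'TC']
print(Fraction(len(both_coffee), len(at_least_one_coffee)))  # 1/3
```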

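Now let's use Bayes' rule.

Let $A$ be the event that both of them are drinking coffee, and let $B$ be the event that at least one of them is drinking coffee.

We want $\Pr[A \mid B]$.

The formula, which follows from writing the numerator of $\Pr[A \mid B] = \frac{\Pr[A \cap B]}{\Pr[B]}$ with the product rule as $\Pr[A \cap B] = \Pr[A] \cdot \Pr[B \mid A]$, is

$$\Pr[A \mid B] = \frac{\Pr[B \mid A] \cdot \Pr[A]}{\Pr[B]}$$

The only thing left is to apply the formula. If both are drinking coffee, then certainly at least one is, so $\Pr[B \mid A] = 1$; CC is one of four equally likely orders, so $\Pr[A] = \frac{1}{4}$; and $\Pr[B] = \frac{3}{4}$*. Therefore

$$\Pr[A \mid B] = \frac{1 \cdot \frac{1}{4}}{\frac{3}{4}} = \frac{1}{3}$$

So, the probability that both of them are drinking coffee, given that at least one of them is, is $\frac{1}{3}$.

*The only way that neither of them is drinking coffee is that both are drinking tea, which has probability $\frac{1}{4}$, so $\Pr[B] = 1 - \frac{1}{4} = \frac{3}{4}$.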
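Bayes' rule, $\Pr[A \mid B] = \frac{\Pr[B \mid A] \cdot \Pr[A]}{\Pr[B]}$, is a one-line computation. A minimal sketch (the function name `bayes` is hypothetical) that reproduces the café result, taking $A$ = "both drink coffee" and $B$ = "at least one drinks coffee":

```python
from fractions import Fraction

def bayes(p_b_given_a, p_a, p_b):
    """Pr[A | B] = Pr[B | A] * Pr[A] / Pr[B]; requires Pr[B] > 0."""
    if p_b == 0:
        raise ValueError("Pr[B] must be positive")
    return p_b_given_a * p_a / p_b

# Café example under the equally-likely assumption.
p_a = Fraction(1, 4)          # CC is one of four equally likely orders
p_b = 1 - Fraction(1, 4)      # complement of TT (both drinking tea)
p_b_given_a = Fraction(1)     # if both drink coffee, at least one does

print(bayes(p_b_given_a, p_a, p_b))  # 1/3
```

The result agrees with the direct enumeration of CC, CT, and TC.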

Note that Bayes' theorem lets us calculate the probability of an earlier event given the outcome of a later event.

Now, let's look at another example from the practice questions of the lecture:

Let $A$ be the event that Albert is giving the lecture.

Let $L$ be the event that Louis goes to the lecture.

We are given $\Pr[A]$, $\Pr[L]$, and the probability that Louis goes to the lecture given that Albert is giving it, $\Pr[L \mid A]$. We want to know the probability that Albert is giving the lecture given that Louis goes to it, that is, $\Pr[A \mid L]$.

We have all that we need, so let's plug them into the formula:

$$\Pr[A \mid L] = \frac{\Pr[L \mid A] \cdot \Pr[A]}{\Pr[L]}$$

What we have as a result is

One more entertaining example from the practice questions.

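This weekend it will rain (event $R$) with probability $\Pr[R]$, be sunny (event $S$) with probability $\Pr[S]$, and hail baseballs (event $H$) otherwise. Let $C$ be the event that I see a crocodile. If it rains, then there is a 50% chance that I will see a crocodile. If it is sunny, then crocodiles will hide themselves from me with probability $\Pr[\overline{C} \mid S]$. If it hails, then I will see a crocodile with complete certainty. What is the probability that I see a crocodile this weekend?

First, let's look at the probability of seeing a crocodile in each case. There is one given that it rains, one given that it is sunny, and one given that it hails. Since these three kinds of weather are disjoint and cover every possibility, the law of total probability applies.

If it rains (which has probability $\Pr[R]$), I see a crocodile with probability $\Pr[C \mid R] = \frac{1}{2}$.

If it is sunny (which has probability $\Pr[S]$), I see a crocodile unless they hide, so $\Pr[C \mid S] = 1 - \Pr[\overline{C} \mid S]$.

If it hails (which has probability $\Pr[H] = 1 - \Pr[R] - \Pr[S]$), I see a crocodile with certainty: $\Pr[C \mid H] = 1$.

All we have to do is weight each case by its probability and add them up:

$$\Pr[C] = \Pr[C \mid R]\Pr[R] + \Pr[C \mid S]\Pr[S] + \Pr[C \mid H]\Pr[H] = \frac{1}{2}\Pr[R] + \left(1 - \Pr[\overline{C} \mid S]\right)\Pr[S] + \Pr[H]$$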

We have the result
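Because the weather probabilities from the original problem are not reproduced here, the sketch below keeps them as parameters. `p_rain`, `p_sun`, and `p_hide_if_sun` are hypothetical names for the probability of rain, the probability of sun, and the probability that crocodiles hide when it is sunny; the 50% (rain) and certainty (hail) cases come from the problem statement.

```python
from fractions import Fraction

def p_see_crocodile(p_rain, p_sun, p_hide_if_sun):
    """Law of total probability over the cases rain, sun, and hail."""
    p_hail = 1 - p_rain - p_sun              # hail covers the remaining probability
    if p_hail < 0:
        raise ValueError("p_rain + p_sun must not exceed 1")
    return (Fraction(1, 2) * p_rain          # rain: 50% chance of a crocodile
            + (1 - p_hide_if_sun) * p_sun    # sun: seen unless they hide
            + 1 * p_hail)                    # hail: seen with certainty

# Arbitrary illustrative inputs, NOT the values from the original problem.
print(p_see_crocodile(Fraction(1, 2), Fraction(1, 4), Fraction(1, 2)))  # 5/8
```

Plugging in the problem's actual probabilities for the three parameters gives the weekend answer.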