Expectation

The expected value of a random variable $R$ is the average value of $R$, where each value is weighted by its probability.

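So, the expectation of $R$ is the sum, over all possible values $v$ of $R$, of $v$ times the probability that $R = v$:

$$\mathrm{Ex}[R] = \sum_{v \in \mathrm{range}(R)} v \cdot \Pr[R = v]$$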
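The definition translates directly into code. The following is a minimal sketch. It assumes a distribution is represented as a Python dict that maps each value $v$ to $\Pr[R = v]$, with each probability stored as a `Fraction` so the arithmetic stays exact. That representation and the function name `expectation` are hypothetical choices for illustration, not something taken from the lecture.

```python
from fractions import Fraction

def expectation(pmf):
    """Return Ex[R] = sum of v * Pr[R = v] for a pmf given as {value: probability}."""
    total_prob = sum(pmf.values())
    # A valid distribution's probabilities must add up to exactly 1.
    if total_prob != 1:
        raise ValueError(f"probabilities sum to {total_prob}, not 1")
    return sum(v * p for v, p in pmf.items())

# Example: a fair six-sided die, each face with probability 1/6.
fair_die = {v: Fraction(1, 6) for v in range(1, 7)}
print(expectation(fair_die))  # 7/2
```

Exact fractions are used because the sum-to-one check would be unreliable with floating-point probabilities.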

It sounds a bit complicated, but let's look at an example that's given in the lecture.

Let's say that we pick a number beforehand, and we roll a die three times. If our number doesn't come up at all, we lose 1 dollar. If it comes up only once, we win 1 dollar. If it comes up twice, we win 2 dollars, and if all three rolls show our number, we win 3 dollars.

Now for the probability of each of these four cases. The probability of never getting the number we chose is $\left(\frac{5}{6}\right)^3$, which is $\frac{125}{216}$.

The probability of getting our number exactly once is $\binom{3}{1} \cdot \frac{1}{6} \cdot \left(\frac{5}{6}\right)^2 = \frac{75}{216}$.

(The factor $\binom{3}{1}$ is there because we count every sequence with exactly one match: any one of the three rolls can be the one that shows our number, and the other two rolls show something different.)

The probability of getting our number exactly twice is similar: $\binom{3}{2} \cdot \left(\frac{1}{6}\right)^2 \cdot \frac{5}{6} = \frac{15}{216}$.

Finally, the probability of getting our number on all three rolls is $\left(\frac{1}{6}\right)^3 = \frac{1}{216}$.
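These four probabilities can be double-checked by brute force. The sketch below enumerates all $6^3 = 216$ equally likely sequences of three rolls and counts how many rolls match the chosen number. It then compares the result with the formula $\binom{3}{k}\left(\frac{1}{6}\right)^k\left(\frac{5}{6}\right)^{3-k}$. The constant `CHOSEN = 6` is a hypothetical choice. By symmetry, any face gives the same counts.

```python
from fractions import Fraction
from itertools import product
from math import comb

CHOSEN = 6  # hypothetical choice of number; any face gives the same counts

# Count sequences of three rolls by how many rolls equal the chosen number.
counts = {k: 0 for k in range(4)}
for rolls in product(range(1, 7), repeat=3):
    counts[rolls.count(CHOSEN)] += 1

# Exact probabilities from enumeration: each sequence has probability 1/216.
enumerated = {k: Fraction(c, 6 ** 3) for k, c in counts.items()}

# The same probabilities from the formula C(3, k) (1/6)^k (5/6)^(3 - k).
formula = {k: comb(3, k) * Fraction(1, 6) ** k * Fraction(5, 6) ** (3 - k)
           for k in range(4)}

print(counts)  # {0: 125, 1: 75, 2: 15, 3: 1}
assert enumerated == formula
```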

Let's see what we have for now:

| number of matches | probability | dollars won |
|---|---|---|
| 0 | $\frac{125}{216}$ | -1 |
| 1 | $\frac{75}{216}$ | 1 |
| 2 | $\frac{15}{216}$ | 2 |
| 3 | $\frac{1}{216}$ | 3 |

So, if we play 216 games, we expect our number to never come up in 125 of them. We expect it to come up once in 75 games, twice in 15, and three times in only 1.

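So, on average, what we gain per game is:

$$\frac{125 \cdot (-1) + 75 \cdot 1 + 15 \cdot 2 + 1 \cdot 3}{216} = -\frac{17}{216} \approx -0.08 \text{ dollars} \approx -8 \text{ cents}$$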

That's negative! This is not a fair game: we expect to lose about 8 cents each time we play.
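The same arithmetic fits in a short sketch. The dictionaries `prob` and `payoff` are hypothetical names. They hold the probabilities computed above and the dollars won for each number of matches. The expectation is the probability-weighted sum of the payoffs.

```python
from fractions import Fraction

# Probability of each number of matches, and the dollars won in that case.
prob = {0: Fraction(125, 216), 1: Fraction(75, 216),
        2: Fraction(15, 216), 3: Fraction(1, 216)}
payoff = {0: -1, 1: 1, 2: 2, 3: 3}

# Ex[winnings] = sum over cases of (dollars won) * (probability of the case).
expected_winnings = sum(payoff[k] * prob[k] for k in prob)

print(expected_winnings)                         # -17/216
print(round(float(expected_winnings) * 100, 1))  # -7.9 (cents)
```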

Expectation can also be defined in terms of outcomes:

$$\mathrm{Ex}[R] = \sum_{\omega \in S} R(\omega) \cdot \Pr[\omega]$$

So, it is the sum, over all outcomes $\omega$ in the sample space $S$, of the value of the random variable at that outcome times the probability of that outcome.

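In the example, the outcomes in the sample space are the $6^3 = 216$ sequences of three rolls, each with probability $\frac{1}{216}$. The random variable maps each sequence to a dollar value: -1, 1, 2, or 3. Grouping the outcomes by their dollar value brings us back to the first formula. We already calculated the probability of each group, so all that remains is to multiply and add. We did exactly that and found $-\frac{17}{216}$.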
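To see that summing over individual outcomes gives the same answer, the sketch below evaluates $\sum_{\omega \in S} R(\omega)\Pr[\omega]$ directly over all 216 roll sequences. It assumes the chosen number is 6 and writes the random variable as a hypothetical function `winnings`.

```python
from fractions import Fraction
from itertools import product

CHOSEN = 6                          # hypothetical choice of number
PAYOFF = {0: -1, 1: 1, 2: 2, 3: 3}  # dollars won for 0, 1, 2, 3 matches

def winnings(outcome):
    """The random variable R: maps a sequence of three rolls to dollars won."""
    return PAYOFF[outcome.count(CHOSEN)]

# Every outcome (a sequence of three fair rolls) has probability 1/216.
sample_space = list(product(range(1, 7), repeat=3))
pr_outcome = Fraction(1, len(sample_space))

# Ex[R] = sum over outcomes w of R(w) * Pr[w].
expected = sum(winnings(w) * pr_outcome for w in sample_space)
print(expected)  # -17/216, the same value as before
```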

This expected value (which was $-\frac{17}{216}$ in our example) is also called the mean value, the mean, or the expectation.

Let's look at another example from the practice exercises.

We have a die that lands on an even number with some probability, call it $p$. Within the even numbers each value is equally likely, and the same holds within the odd numbers. Let's find its expectation.

We have 3 even numbers (2, 4, 6) and 3 odd numbers (1, 3, 5) it can land on.

So, the probability of getting any particular even number is $\frac{p}{3}$.

The probability of getting any particular odd number is $\frac{1 - p}{3}$.

So, what we'll do is sum up each value times its probability:

$$\mathrm{Ex}[R] = \frac{p}{3}(2 + 4 + 6) + \frac{1 - p}{3}(1 + 3 + 5) = 4p + 3(1 - p) = 3 + p$$
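The sketch below treats the die's bias as a hypothetical argument `p_even`. The die shows an even face with probability `p_even`, and within each parity class the three faces are equally likely. Summing each value times its probability gives $3 + p_{\text{even}}$ in general, and a fair die (`p_even = 1/2`) gives $\frac{7}{2}$.

```python
from fractions import Fraction

def biased_die_expectation(p_even):
    """Ex[R] for a die that shows an even face with probability p_even,
    where the three even faces are equally likely and so are the three odd faces."""
    p_even = Fraction(p_even)
    pmf = {}
    for face in range(1, 7):
        if face % 2 == 0:
            pmf[face] = p_even / 3        # each of 2, 4, 6
        else:
            pmf[face] = (1 - p_even) / 3  # each of 1, 3, 5
    return sum(face * prob for face, prob in pmf.items())

# A few hypothetical biases; p_even = 1/2 is a fair die.
for p in (Fraction(1, 2), Fraction(2, 3), Fraction(1, 4)):
    print(p, biased_die_expectation(p))
```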

The expectation of a binomially distributed random variable $B \sim \mathrm{Bin}(n, p)$ is $np$.

Let's look at an example.

All of our coins are fair, and we flip 200 of them (or, equivalently, we flip one fair coin 200 times).

We want to know the expected number of heads.

Well, $n$ is 200, and $p$ is $\frac{1}{2}$. Multiplying them, we get $np = 100$.

So, the expected number of heads is 100.
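As a sanity check on the formula $np$, the sketch below computes $\sum_k k \cdot \Pr[B = k]$ straight from the definition of expectation. It uses $\Pr[B = k] = \binom{n}{k} p^k (1 - p)^{n-k}$ and exact fractions. The helper name `binomial_expectation` is hypothetical.

```python
from fractions import Fraction
from math import comb

def binomial_expectation(n, p):
    """Ex[B] for B ~ Bin(n, p), computed directly as sum_k k * Pr[B = k],
    with Pr[B = k] = C(n, k) p^k (1 - p)^(n - k)."""
    p = Fraction(p)
    return sum(k * comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1))

# 200 flips of a fair coin: the direct sum agrees with n * p = 100.
print(binomial_expectation(200, Fraction(1, 2)))  # 100
```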

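Conditional expectation is defined as:

$$\mathrm{Ex}[R \mid A] = \sum_{r \in \mathrm{range}(R)} r \cdot \Pr[R = r \mid A]$$

It is the sum, over all possible values $r$ that $R$ might take, of $r$ times the probability that $R$ takes that value, given $A$.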

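An example: let's say we roll a fair die. Let $R$ be the indicator random variable that equals 1 if we get an even number (2, 4, or 6) and 0 otherwise. Let $A$ be the event that we get a prime number (2, 3, or 5).

The expectation of $R$ given $A$ (the expectation of getting an even number given that we got a prime number) is $\mathrm{Ex}[R \mid A] = 1 \cdot \Pr[R = 1 \mid A] + 0 \cdot \Pr[R = 0 \mid A] = \frac{1}{3}$, because 2 is the only even number among the three primes.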
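The example can be checked by enumeration over the six faces of a fair die. The sketch below uses the equivalent outcome form $\mathrm{Ex}[R \mid A] = \sum_{\omega \in A} R(\omega)\Pr[\omega] / \Pr[A]$. The random variable is the indicator of an even roll and the event is "the roll is prime". All function names are hypothetical.

```python
from fractions import Fraction

FACES = range(1, 7)
PR = Fraction(1, 6)  # fair die: every face has probability 1/6

def conditional_expectation(r, event):
    """Ex[R | A], computed as the sum over outcomes w in A of R(w) * Pr[w] / Pr[A]."""
    pr_a = sum(PR for w in FACES if event(w))
    return sum(r(w) * PR for w in FACES if event(w)) / pr_a

def is_prime(w):
    return w in (2, 3, 5)

def even_indicator(w):
    """Indicator random variable: 1 for an even roll, 0 otherwise."""
    return 1 if w % 2 == 0 else 0

print(conditional_expectation(even_indicator, is_prime))  # 1/3
```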

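The law of total expectation is similar to the law of total probability in the sense that it lets us reason by cases.

If the events $A_1, A_2, \ldots$ partition the sample space (each with $\Pr[A_i] > 0$), then:

$$\mathrm{Ex}[R] = \sum_i \mathrm{Ex}[R \mid A_i] \cdot \Pr[A_i]$$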
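As a small check of the law, the sketch below takes a fair die roll $R$ and splits the sample space into two illustrative cases: "prime" (2, 3, 5) and "not prime" (1, 4, 6). Weighting each conditional expectation by the probability of its case should recover $\mathrm{Ex}[R] = \frac{7}{2}$. The case split and the function names are assumptions made for this example.

```python
from fractions import Fraction

FACES = range(1, 7)
PR = Fraction(1, 6)  # fair die

def pr_event(event):
    return sum(PR for w in FACES if event(w))

def cond_expectation(event):
    """Ex[R | A], where R is the face value of the roll."""
    return sum(w * PR for w in FACES if event(w)) / pr_event(event)

def is_prime(w):
    return w in (2, 3, 5)

def not_prime(w):
    return not is_prime(w)

# The cases A_1 = "prime" and A_2 = "not prime" partition the sample space.
cases = [is_prime, not_prime]
total = sum(cond_expectation(a) * pr_event(a) for a in cases)

direct = sum(w * PR for w in FACES)
print(total, direct)  # 7/2 7/2
```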

Let's see how we can use it to calculate the expected number of heads in $n$ flips of a coin.

Let $e_n$ be the expected number of heads in $n$ flips.

If the first flip is a head, the expected number of heads is $1 + e_{n-1}$: we have 1 head plus the expected number of heads in the remaining $n - 1$ flips.

On the other hand, if the first flip is a tail, the expected number of heads is $e_{n-1}$. The first flip contributes no heads, so all that counts is the remaining $n - 1$ flips.

These are the two cases.

Now, let $p$ be the probability of a head, and $q$ be the probability of a tail.

By total expectation:

$$e_n = p\,(1 + e_{n-1}) + q\,e_{n-1}$$

$q$ is $1 - p$, so substituting it, we get

$$e_n = p\,(1 + e_{n-1}) + (1 - p)\,e_{n-1}$$

Expanding:

$$e_n = p + p\,e_{n-1} + e_{n-1} - p\,e_{n-1}$$

It all simplifies to $e_n = e_{n-1} + p$.

Look what a beautiful thing we have: $e_n$ is defined in terms of $e_{n-1}$, so it is a recursive formula!

So, each step down from $n$ to $n - 1$ adds one more $p$.

Going further, $e_n = e_{n-2} + 2p = \cdots = e_0 + np$, and since zero flips give zero heads, $e_0 = 0$.

So, $e_n = np$, which matches the expectation of the binomial distribution.

Therefore, the expected number of heads in 1 flip is just $e_1 = p$, the probability of getting a head in a single flip (which is $\frac{1}{2}$ for a fair coin).
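The recurrence can be run directly. The sketch below starts from $e_0 = 0$ and applies the total-expectation step $e_n = p(1 + e_{n-1}) + q\,e_{n-1}$ once per flip. It then checks the result against the closed form $np$. The function name `expected_heads` and the bias $p = \frac{1}{3}$ are hypothetical choices for illustration.

```python
from fractions import Fraction

def expected_heads(n, p):
    """e_n from the total-expectation recurrence
    e_n = p * (1 + e_{n-1}) + q * e_{n-1}, with e_0 = 0 and q = 1 - p."""
    p = Fraction(p)
    q = 1 - p
    e = Fraction(0)  # e_0: no flips, no heads
    for _ in range(n):
        # Heads first: 1 head plus e_{n-1}. Tails first: just e_{n-1}.
        e = p * (1 + e) + q * e
    return e

p = Fraction(1, 3)  # hypothetical bias, chosen only for illustration
for n in range(10):
    assert expected_heads(n, p) == n * p  # matches the closed form e_n = n p

print(expected_heads(200, Fraction(1, 2)))  # 100
```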

The expected time to failure answers the question of how long we have to wait for something to happen.

Let's say we want to get a tail this time, so a head indicates failure.

Then, the expected time to failure in this case is the expected number of flips until a head comes up.

Let $p$ be the probability of getting a head ($H$), let $q$ be the probability of getting a tail ($T$), and let $F$ be the number of flips until we get the first head.

The probability of getting a head on the first flip is just $\Pr[F = 1] = p$.

The probability of getting the first head on the second flip is $\Pr[F = 2] = qp$, because it means that our first flip resulted in tails.

Note: $p + q = 1$, and in general $\Pr[F = n] = q^{n-1}p$.

The expected number of flips until we get a head is $\frac{1}{p}$:

$$\mathrm{Ex}[F] = \frac{1}{p}$$

Why?

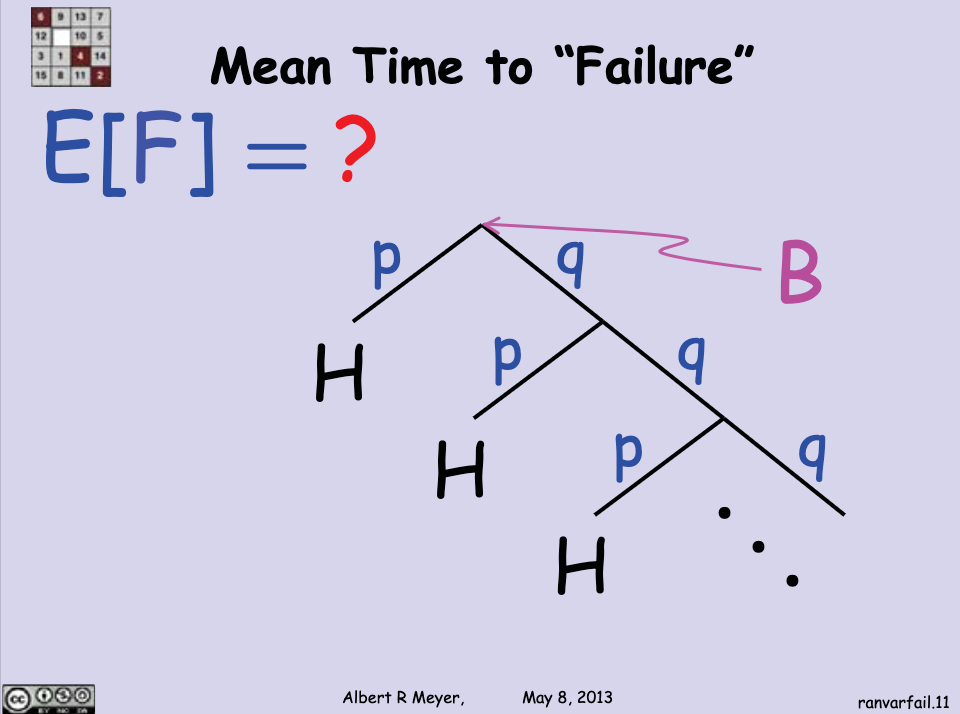

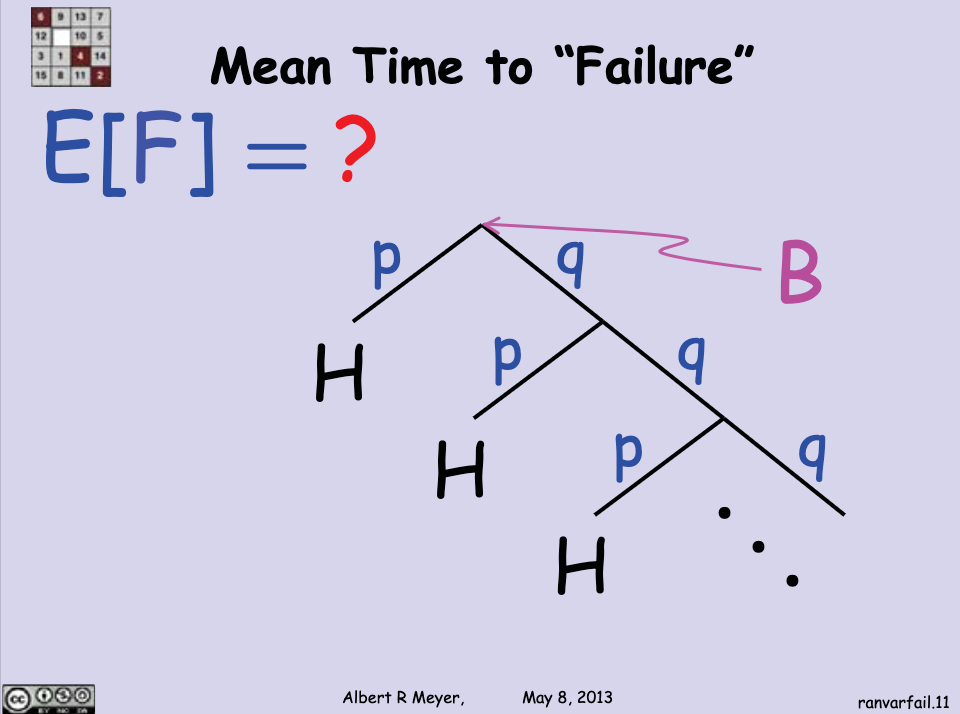

Let's look at a tree that helps us see it:

Each branch corresponds to one flip of the coin, so the number of flips until we get a head can be counted by following branches until we reach an $H$.

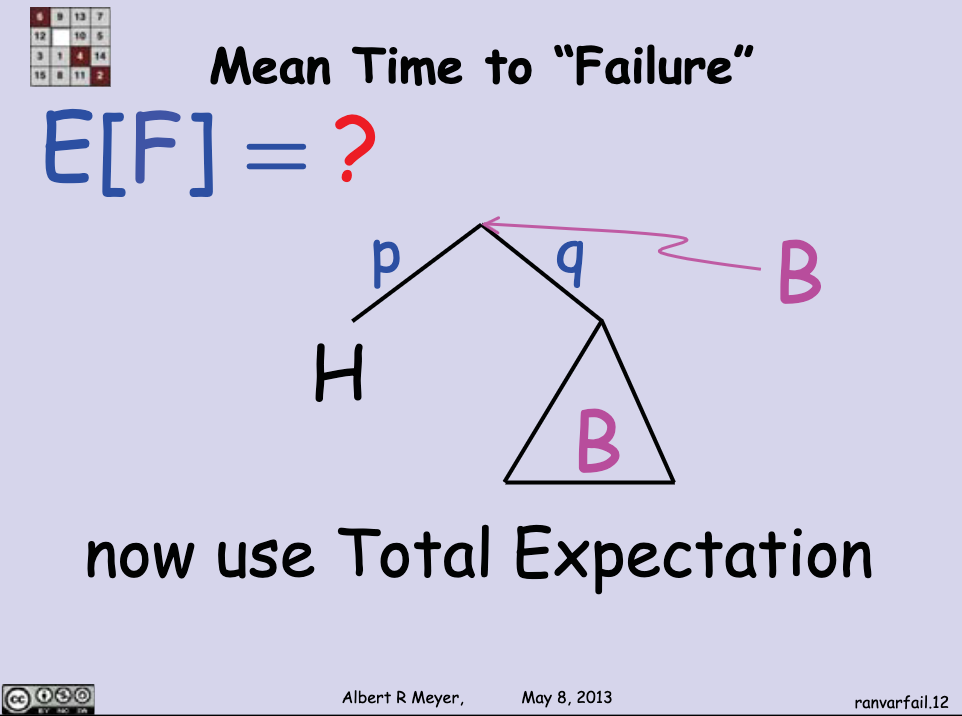

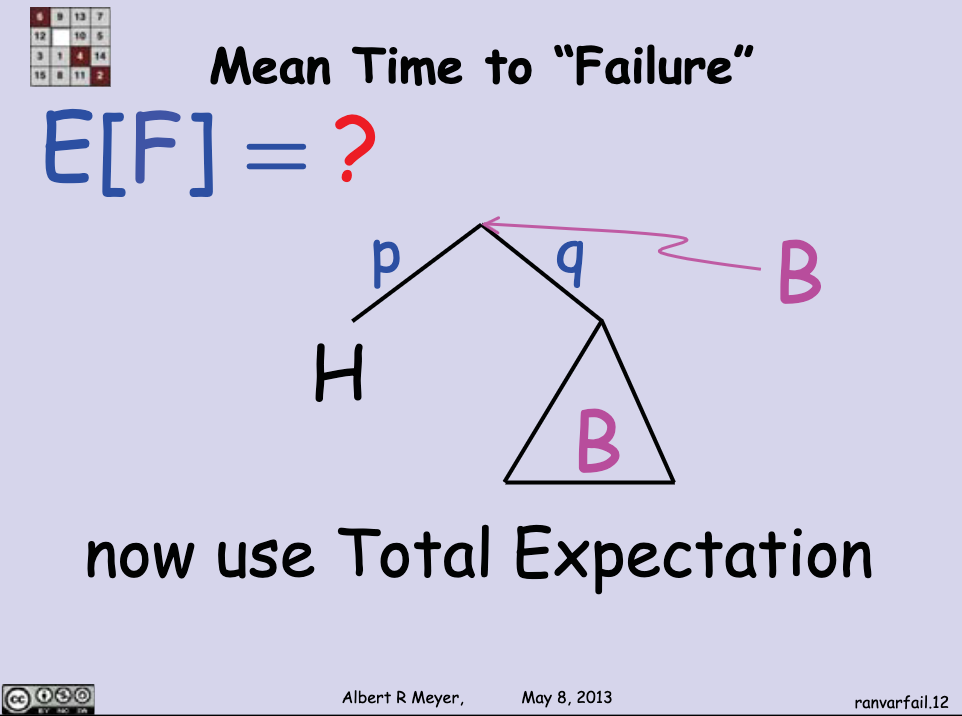

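This tree is recursive: the part of the tree below the first $T$ branch is a copy of the whole tree, so we can replace that part by the tree itself.

As the slide says, we can use Total Expectation to find $\mathrm{Ex}[F]$, which is the expected number of branches we have to follow until we reach an $H$.

It will be (the expectation of $F$ given that the first flip is a head) times (the probability of getting a head) plus (the expectation of $F$ given that the first flip is a tail) times (the probability of getting a tail).

English can be confusing sometimes, so:

$$\mathrm{Ex}[F] = \mathrm{Ex}[F \mid \text{first flip is } H] \cdot p + \mathrm{Ex}[F \mid \text{first flip is } T] \cdot q$$

Well, the first part (the expected number of flips given that we get a head on the first flip) is just 1:

$$\mathrm{Ex}[F \mid \text{first flip is } H] = 1$$

The expected number of flips until we get a head, given that the first flip resulted in a tail, is $1 + \mathrm{Ex}[F]$. Notice that after we get a tail and follow the branch through $T$, what remains is the whole tree again. So, we have already followed one branch (that's the $1$), and from there we still expect to need $\mathrm{Ex}[F]$ more flips to get a head. Therefore, $\mathrm{Ex}[F \mid \text{first flip is } T] = 1 + \mathrm{Ex}[F]$.

Putting it all together, we have $\mathrm{Ex}[F] = 1 \cdot p + (1 + \mathrm{Ex}[F]) \cdot q$.

Simplifying it, using $p + q = 1$:

$$\mathrm{Ex}[F] = p + q + q\,\mathrm{Ex}[F] = 1 + q\,\mathrm{Ex}[F]$$

Moving the $\mathrm{Ex}[F]$ terms to one side and factoring out $\mathrm{Ex}[F]$:

$$(1 - q)\,\mathrm{Ex}[F] = 1$$

Then:

$$\mathrm{Ex}[F] = \frac{1}{1 - q}$$

$1 - q$ can be written as $p$:

$$\mathrm{Ex}[F] = \frac{1}{p}$$

So, the expected number of flips until we get a head is indeed $\frac{1}{p}$.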
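The result $\mathrm{Ex}[F] = \frac{1}{p}$ can be checked numerically in two ways. The first truncates the series $\sum_{n \ge 1} n \cdot q^{n-1} p$, which comes from the distribution of $F$. The second simulates flips until the first head. The sketch below assumes a hypothetical head probability $p = 0.25$ and uses Python's `random.Random` with a fixed seed, so the simulated mean is reproducible but still subject to sampling noise.

```python
import random

def mean_time_series(p, terms=10_000):
    """Truncated sum of n * Pr[F = n] = n * q^(n-1) * p for n = 1..terms."""
    q = 1 - p
    return sum(n * q ** (n - 1) * p for n in range(1, terms + 1))

def simulate_mean_time(p, trials=100_000, seed=0):
    """Average number of flips until the first head, by simulation."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        flips = 1
        while rng.random() >= p:  # a tail (probability q = 1 - p): flip again
            flips += 1
        total += flips
    return total / trials

p = 0.25  # hypothetical probability of a head
print(1 / p)                  # 4.0, from the formula Ex[F] = 1/p
print(mean_time_series(p))    # very close to 4.0
print(simulate_mean_time(p))  # close to 4.0, up to sampling noise
```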

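Two things to note:

- Expectation is linear: let $R_1$ and $R_2$ be random variables, and $a_1$ and $a_2$ constants. Then, $\mathrm{Ex}[a_1 R_1 + a_2 R_2] = a_1 \mathrm{Ex}[R_1] + a_2 \mathrm{Ex}[R_2]$. This holds even when $R_1$ and $R_2$ are not independent.
- For independent $R_1$ and $R_2$, $\mathrm{Ex}[R_1 \cdot R_2] = \mathrm{Ex}[R_1] \cdot \mathrm{Ex}[R_2]$.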
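Both facts can be checked on two independent fair dice by enumerating the 36 equally likely outcomes. The sketch below uses hypothetical helpers `ex`, `r1`, and `r2`. It also shows why the product rule needs independence: replacing $R_2$ by $R_1$ itself keeps linearity intact, but $\mathrm{Ex}[R_1 \cdot R_1] = \frac{91}{6}$, not $\frac{49}{4}$.

```python
from fractions import Fraction
from itertools import product

PR = Fraction(1, 36)  # two independent fair dice: 36 equally likely outcomes
OUTCOMES = list(product(range(1, 7), repeat=2))

def ex(f):
    """Ex[f] over the two-dice sample space."""
    return sum(f(w) * PR for w in OUTCOMES)

def r1(w):
    return w[0]  # value of the first die

def r2(w):
    return w[1]  # value of the second die

# Linearity: Ex[2 R1 + 3 R2] = 2 Ex[R1] + 3 Ex[R2].
assert ex(lambda w: 2 * r1(w) + 3 * r2(w)) == 2 * ex(r1) + 3 * ex(r2)

# Independence: Ex[R1 R2] = Ex[R1] Ex[R2] = (7/2)^2 = 49/4.
assert ex(lambda w: r1(w) * r2(w)) == ex(r1) * ex(r2) == Fraction(49, 4)

# Linearity needs no independence, but the product rule does.
assert ex(lambda w: r1(w) + r1(w)) == 2 * ex(r1)
assert ex(lambda w: r1(w) * r1(w)) == Fraction(91, 6)

print(ex(r1), ex(lambda w: r1(w) * r2(w)), ex(lambda w: r1(w) ** 2))
```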