Intro to Discrete Probability

Whatever your relationship with probability theory was in the past, let's look at it with a fresh pair of eyes this time.

We start with a set of random outcomes. Some portion of those outcomes (a subset) is an event. What we are looking for is the probability of an event.

Before looking at an example, we can keep in mind what is called a 4-part method:

- Identify the outcomes

- Identify the event

- Assign outcome probabilities

- Compute event probabilities

Now, let's look at an example from a practice exercise of the course.

Parker has two pairs of black shoes and three pairs of brown shoes. He also has three pairs of red socks, four pairs of brown socks and six pairs of black socks.

Now let's say that Parker chooses a pair of shoes at random and a pair of socks at random. We would like to know the probability of him choosing shoes and socks of the same color.

Let's construct a table to get a clearer view of what we have:

| Color | Shoes (pairs) | Socks (pairs) |
|---|---|---|
| black | 2 | 6 |
| red | 0 | 3 |
| brown | 3 | 4 |
| TOTAL | 5 | 13 |

The first thing we need to do is to identify the outcomes.

In this case, an outcome is a pair of colors.

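The second thing to do is to identify the event of interest: the outcomes in which the shoes and the socks have the same color, namely (black, black) and (brown, brown).

The number of total outcomes is $2 \cdot 3 = 6$ (two shoe colors times three sock colors).

The number of outcomes in the event is $2$.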

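Now, the third step is to assign outcome probabilities. We start from the probability of each individual choice:

| Choosing a pair of... | Probability |
|---|---|
| ...black shoes | $\frac{2}{5}$ |
| ...brown shoes | $\frac{3}{5}$ |
| ...black socks | $\frac{6}{13}$ |
| ...red socks | $\frac{3}{13}$ |
| ...brown socks | $\frac{4}{13}$ |

Assuming the shoes and the socks are chosen independently, the probability of an outcome is the product of its two entries. For example, (black shoes, red socks) has probability $\frac{2}{5} \cdot \frac{3}{13} = \frac{6}{65}$.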

The final step is to compute the event probabilities to find the probability that he chooses shoes and socks of the same color.

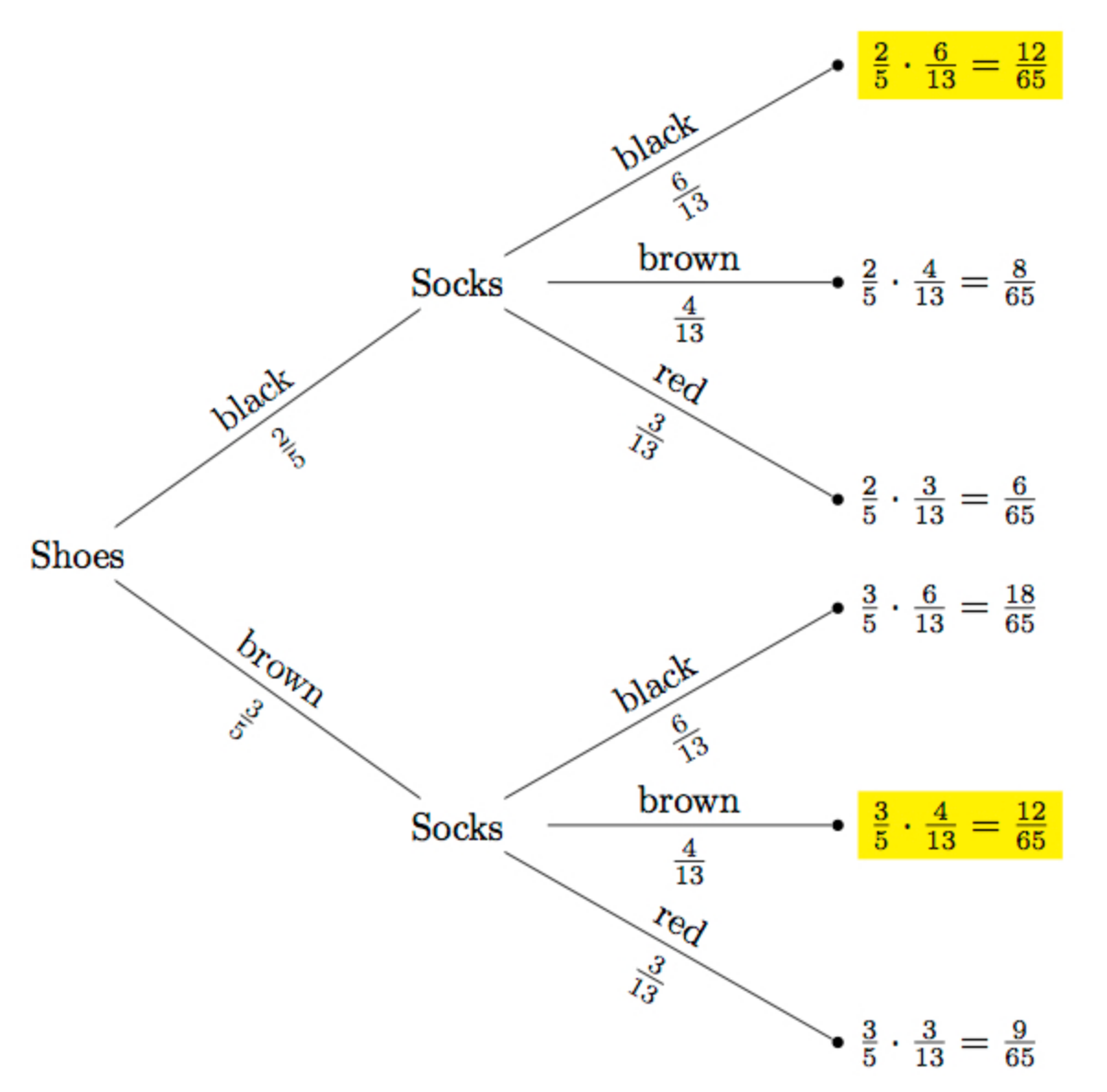

In order to do that, let's look at the probability tree that is constructed:

From Q4 Explanation.

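From the tree, the probability of choosing black shoes and black socks is $\frac{2}{5} \cdot \frac{6}{13} = \frac{12}{65}$, and the probability of choosing brown shoes and brown socks is $\frac{3}{5} \cdot \frac{4}{13} = \frac{12}{65}$.

So, the probability of choosing the same color of shoes and socks is $\frac{12}{65} + \frac{12}{65} = \frac{24}{65}$.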
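The four steps can be traced in a few lines of Python. This is only a sketch: the variable names are made up for illustration, and it assumes the shoes and the socks are chosen independently, so that an outcome probability is the product of the two individual probabilities.

```python
from fractions import Fraction
from itertools import product

# Number of pairs of each color, taken from the problem statement.
shoes = {"black": 2, "brown": 3}
socks = {"red": 3, "brown": 4, "black": 6}

total_shoes = sum(shoes.values())  # 5 pairs of shoes
total_socks = sum(socks.values())  # 13 pairs of socks

# Step 1: the outcomes are (shoe color, sock color) pairs.
outcomes = list(product(shoes, socks))

# Step 2: the event is "both colors are the same".
event = [(s, k) for (s, k) in outcomes if s == k]

# Step 3: outcome probabilities (assumption: independent choices).
prob = {
    (s, k): Fraction(shoes[s], total_shoes) * Fraction(socks[k], total_socks)
    for (s, k) in outcomes
}

# Step 4: the event probability is the sum over the outcomes in the event.
same_color = sum(prob[o] for o in event)
print(same_color)  # 24/65
```

Using `Fraction` instead of floats keeps the result exact, so it can be compared directly with the hand calculation.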

Let's look at something called probability spaces.

A probability space consists of two things: a sample space and a probability function.

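A sample space is a countable set $S$ whose elements are the outcomes.

A probability function is a function $\Pr : S \to [0, 1]$ that assigns a probability (ranging from $0$ to $1$) to every outcome.

The sum of the probabilities of all the outcomes should add up to $1$.

Let $\omega$ denote an outcome in $S$.

So, we should have a probability function $\Pr$ with $0 \le \Pr[\omega] \le 1$ for every $\omega \in S$ and $\sum_{\omega \in S} \Pr[\omega] = 1$.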
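For a finite sample space, the two requirements (every probability lies between 0 and 1, and all of them add up to 1) can be checked mechanically. The sketch below is illustrative only: the helper name `is_probability_function` and the dictionary representation are assumptions, and the numbers are the six outcome probabilities of the shoes-and-socks example, each computed as a shoe probability times a sock probability.

```python
from fractions import Fraction

def is_probability_function(pr):
    """Return True if `pr` (outcome -> probability) is a valid probability function."""
    probs = list(pr.values())
    return all(0 <= p <= 1 for p in probs) and sum(probs) == 1

# Outcome probabilities for (shoe color, sock color), assuming independent choices.
shoes_and_socks = {
    ("black", "red"): Fraction(6, 65),
    ("black", "brown"): Fraction(8, 65),
    ("black", "black"): Fraction(12, 65),
    ("brown", "red"): Fraction(9, 65),
    ("brown", "brown"): Fraction(12, 65),
    ("brown", "black"): Fraction(18, 65),
}

# A hypothetical assignment that fails: the probabilities sum to 5/6, not 1.
not_a_space = {"x": Fraction(1, 2), "y": Fraction(1, 3)}

print(is_probability_function(shoes_and_socks))  # True
print(is_probability_function(not_a_space))      # False
```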

The reason for constructing the tree model was to find a probability space.

So, the leaves of the tree correspond to outcomes, and we calculate each outcome probability by multiplying the branch probabilities along the path to its leaf.
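To make the link between a tree and a probability space concrete, here is a small sketch for the shoes-and-socks example. The nested-dictionary layout and the function name `leaves` are assumptions made for illustration, and the tree is assumed to branch first on shoe color and then on sock color.

```python
from fractions import Fraction

# Each node maps a branch label to (branch probability, subtree).
# A subtree of None marks a leaf.
sock_branches = {
    "red": (Fraction(3, 13), None),
    "brown": (Fraction(4, 13), None),
    "black": (Fraction(6, 13), None),
}
tree = {
    "black": (Fraction(2, 5), sock_branches),
    "brown": (Fraction(3, 5), sock_branches),
}

def leaves(node, path=(), p=Fraction(1)):
    """Yield (outcome, probability) for every leaf below `node`."""
    for label, (branch_p, child) in node.items():
        if child is None:
            # Leaf: the outcome probability is the product along the path.
            yield path + (label,), p * branch_p
        else:
            yield from leaves(child, path + (label,), p * branch_p)

space = dict(leaves(tree))
print(space[("black", "black")])  # 12/65
print(sum(space.values()))        # 1
```

The leaf probabilities summing to 1 is exactly the condition a probability function has to satisfy.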

An event is a subset of the sample space, some set of outcomes.

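The probability of an event $E$ is the sum of the probabilities of all the outcomes in the event, where $\omega$ ranges over the outcomes in $E$:

$$\Pr[E] = \sum_{\omega \in E} \Pr[\omega]$$

From that, we get what is called the sum rule: if we have pairwise disjoint events $E_0, E_1, E_2, \dots$, then the probability of their union is the sum of their probabilities.

Defined precisely:

$$\Pr\left[\bigcup_{n} E_n\right] = \sum_{n} \Pr[E_n]$$

For example, if we have three pairwise disjoint events $A$, $B$ and $C$, then the probability of any one of them occurring is

$$\Pr[A \cup B \cup C] = \Pr[A] + \Pr[B] + \Pr[C]$$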
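The sum rule is easy to check on a small case. The example below uses one roll of a fair six-sided die, which is a hypothetical sample space chosen only for illustration, and the helper name `prob` is likewise an assumption.

```python
from fractions import Fraction

# Hypothetical sample space: one roll of a fair six-sided die.
pr = {face: Fraction(1, 6) for face in range(1, 7)}

def prob(event):
    """Probability of an event: the sum of the probabilities of its outcomes."""
    return sum((pr[w] for w in event), Fraction(0))

# Three pairwise disjoint events.
A, B, C = {1}, {2, 3}, {6}
union = A | B | C

# Sum rule: the probability of the union equals the sum of the probabilities.
assert prob(union) == prob(A) + prob(B) + prob(C)
print(prob(union))  # 2/3
```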

Now let's define some rules:

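| Rule | Statement |
|---|---|
| The difference rule: | $\Pr[A - B] = \Pr[A] - \Pr[A \cap B]$ |
| By the sum rule: | $\Pr[A] = \Pr[A - B] + \Pr[A \cap B]$, because $A - B$ and $A \cap B$ are disjoint and their union is $A$. |
| The inclusion-exclusion: | $\Pr[A \cup B] = \Pr[A] + \Pr[B] - \Pr[A \cap B]$ |
| | This is similar to what we have seen in this section. |
| The union bound: | $\Pr[A \cup B] \le \Pr[A] + \Pr[B]$ |
| | This follows from inclusion-exclusion for two sets: the probability of the union is the sum of the two probabilities minus the probability of the intersection, so it cannot exceed the sum of the two probabilities. |
| The monotonicity: | If $A \subseteq B$, then $\Pr[A] \le \Pr[B]$. |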
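These rules can be sanity-checked by brute force on a small space. The sketch below assumes the standard statements of the rules (difference rule, inclusion-exclusion, union bound, monotonicity) and tests them on every pair of events of a made-up, non-uniform sample space with four outcomes. The space and the helper names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical non-uniform sample space with four outcomes.
pr = {
    "a": Fraction(1, 2),
    "b": Fraction(1, 4),
    "c": Fraction(1, 8),
    "d": Fraction(1, 8),
}

def prob(event):
    """Probability of an event: the sum of the probabilities of its outcomes."""
    return sum((pr[w] for w in event), Fraction(0))

def all_events(space):
    """Every subset of the sample space, as frozensets."""
    items = list(space)
    return [
        frozenset(c)
        for r in range(len(items) + 1)
        for c in combinations(items, r)
    ]

events = all_events(pr)
for A in events:
    for B in events:
        # Difference rule
        assert prob(A - B) == prob(A) - prob(A & B)
        # Inclusion-exclusion
        assert prob(A | B) == prob(A) + prob(B) - prob(A & B)
        # Union bound
        assert prob(A | B) <= prob(A) + prob(B)
        # Monotonicity
        if A <= B:
            assert prob(A) <= prob(B)

print(len(events), "events checked pairwise")  # 16 events checked pairwise
```

A check like this is not a proof, but it catches a misremembered sign or inequality quickly.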